KSI Adaptive Management Learning Brief #1[1]

January 2022

Introduction

This brief is the first in a set of four learning documents that capture and share key lessons the Knowledge Sector Initiative (KSI) has learned in its efforts to implement an adaptive management approach during Phase 2 of its operations (2017-2022). There are an increasing number of toolkits and templates available for those wishing to advance adaptive approaches in their work, their organizations or their partners.[2] These briefs do not aim to replicate them. Instead, they are intended to be practitioner-focused: while they make some reference to the broader literature, our aim is to provide a succinct description of the KSI approach, reflect on a limited number of key areas that have presented challenges even for those intentionally adopting these approaches, and share a set of lessons we hope will be useful to others attempting to implement similar adaptive approaches.

This first brief lays the foundation for the practical focus of the three briefs that follow by clarifying how the program actually understands the idea of adaptation. After outlining the origins of the KSI approach, we identify three specific ways in which that understanding became more nuanced as the program progressed. The second brief then explores the hard systems and processes the program put in place for adaptation, including greater detail on monitoring, evaluation and learning systems. However, it is worth emphasizing that a focus on these tools can obscure or minimize the importance of those working within the system. Therefore, the remaining two briefs focus on lessons learned about the human side of adaptation (including resourcing, communication and relationship management) and about the leadership of adaptive programs (including the distribution of leadership, leaders’ roles and responsibilities, and information needs).

The intention to adapt: Origins and influences

KSI entered its second five-year phase with a clear intention to make adaptive management a defining feature of its operations. With five years of Phase 1 experience under its belt, the program had grappled with the challenges of complexity in institutional reform processes, and the path to progress in some of its most promising achievements, such as support to procurement reform, had reinforced key messages emerging in the literature on adaptive management. The Phase 2 Program Implementation Strategy made this intention explicit, laying out an approach that would integrate monitoring, evaluation and learning; use flexibility in program structures and processes to enable adaptation; retain a flexible emerging priorities fund; and prioritise relationship management. As the lessons documented in this set of briefs make clear, many of these original intentions have proven as important as anticipated, while other complementary lessons have emerged during implementation.

In designing the Phase 2 systems and processes, KSI built not only on its own experience but also on the wider research and learning of the team, and on the experiences and insights that partners such as ODI sought to share from other contexts through their role in the program. KSI’s ongoing engagement with the Australian Department of Foreign Affairs and Trade (DFAT), and with other DFAT programs in Indonesia that have implemented and reflected upon ideas about learning and adaptation in their own work, provided further sources of information for the KSI team.

Understanding adaptation in KSI: Timing and scale

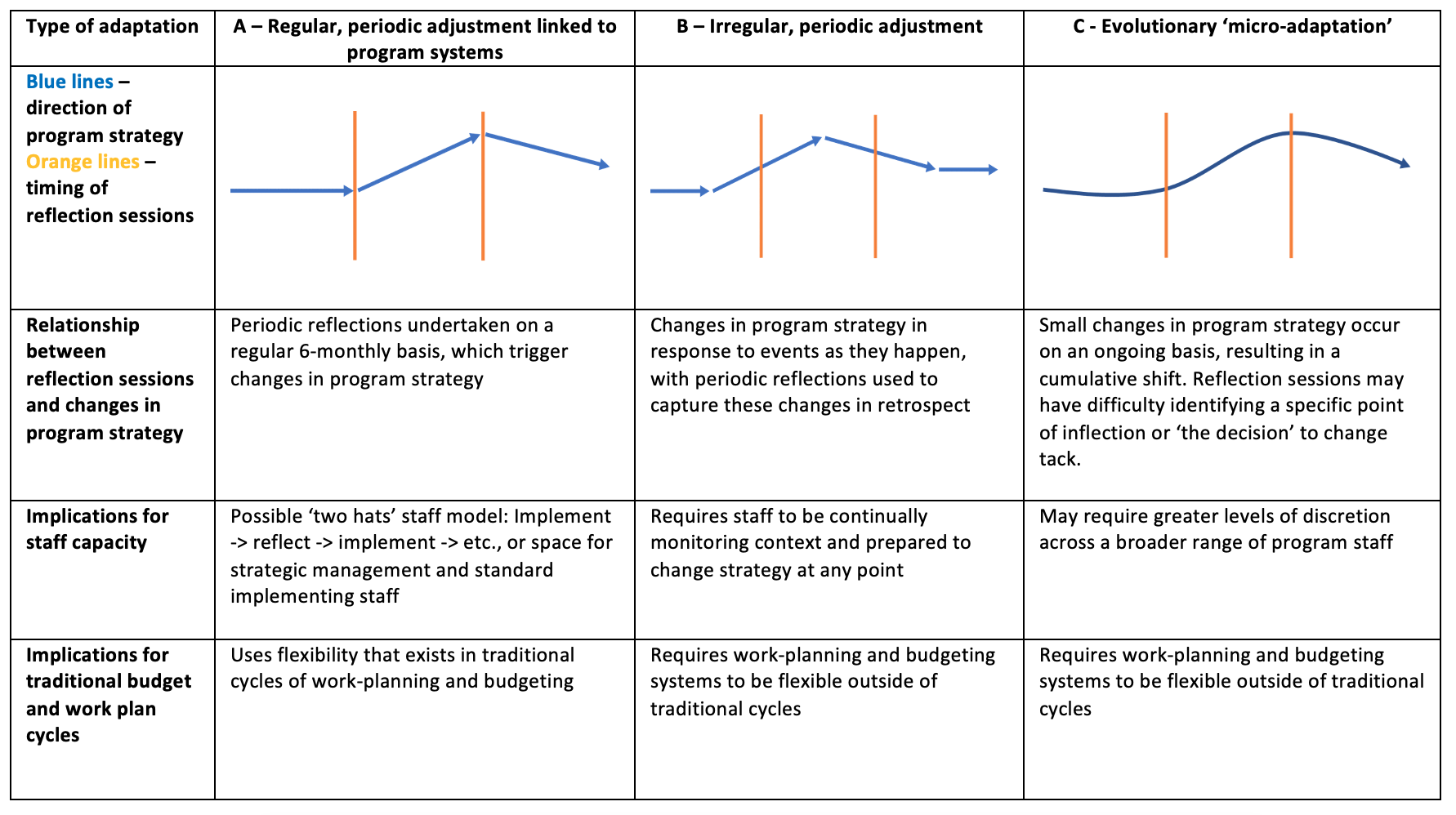

Early in the action research that accompanied and supported KSI’s adaptive management processes, the team identified a number of different types of adaptation that might be observed (Figure 1). The intent of introducing this typology was to develop a better understanding of what different forms of adaptation require and how KSI operates with respect to each. The types are not mutually exclusive; nor is any judgement implied as to which is preferable, and none is measured against a single established ‘best practice’.

Figure 1: Types of adaptations

As the program reaches the conclusion of Phase 2, it is now clear that all three types were relevant in practice. Examples of Type A adaptation include decisions made and executed through six-monthly and annual processes, such as exits from the program’s work on KRISNA and on policy analysts (Box 1 below). Type B adaptations include a series of adjustments made in response to the emergence of COVID-19 in the early months of 2020, shortly after the 2020 workplan had been agreed and before any subsequent formal review in June of that year. Type C changes, typically tactical changes undertaken within initiatives in the course of adaptive delivery, were fairly widespread but were particularly notable in the work on formal institutional reform of knowledge governance and financing.

Understanding adaptation in KSI: Flexibility and adaptation

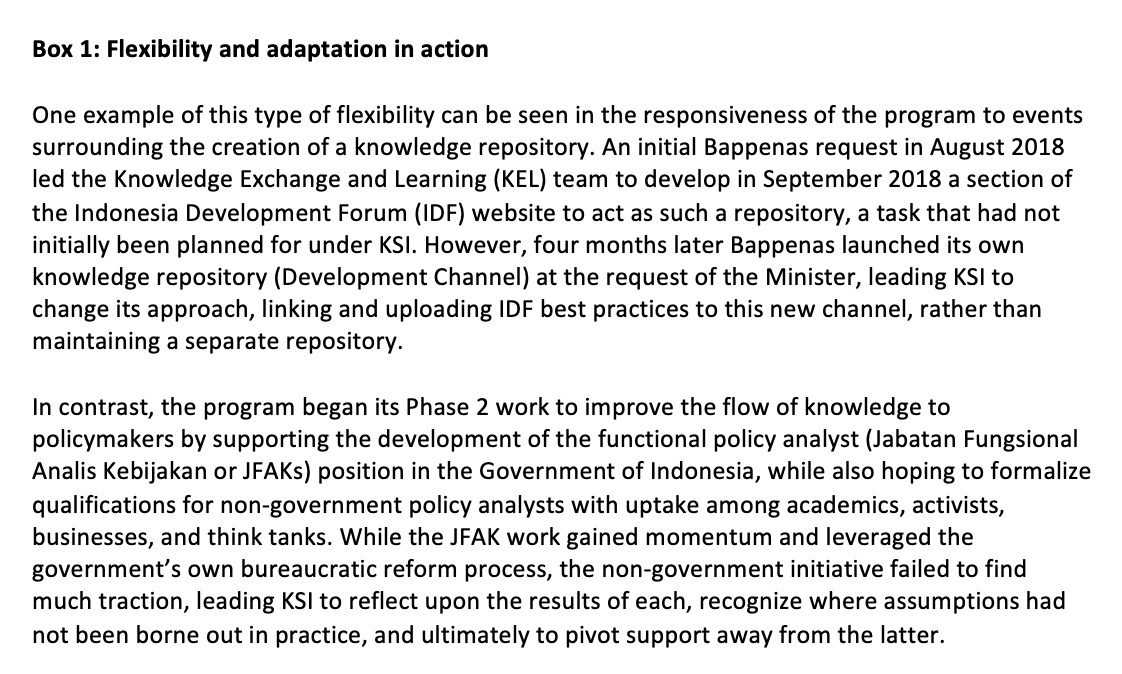

In addition to this typology of timing and scale, an internal review of adaptation in the early stages of Phase 2 pointed to another distinction that helped the program refine its thinking about what prompts change and about the program’s posture. In that early review, many of the changes recorded by the team involved responding to partner requests or, more broadly, to changes in the context. In this sense, the program was flexible in important ways (e.g. introducing new workstreams, amending budgets and outputs) that allowed it to pursue emerging opportunities, demonstrate responsiveness and build relationships (Box 1).

While this responsiveness to changed circumstances was valuable, it was ‘not the same thing as the purposeful experimentation and course correction that is required because of complexity. A limitation of what might be called the flexible blueprint approach… is that [programs] may ‘flex’ in response to changes in external circumstances, but they do not learn. They do not change course in a decisive way when it becomes clear their initial strategies are not working’ (Booth et al., 2018: 9).[3] In other cases, however, KSI’s course corrections were in fact prompted by reflections on the effectiveness of its own efforts and on the assumptions that had underpinned those efforts (Box 1). These too contributed valuably to the effectiveness and efficiency of implementation, but they required different skills and processes from the team.

This distinction between flexibility and adaptation matters for more than the academic exercise of deciding whether a change in the program was prompted by an ‘external’ change in context or by a reflection on whether existing theories of change and the corresponding strategy were being borne out in practice. It also helped to trigger a reflection on the posture of the program: whether a program can not only react to changes in the external environment, but also proactively interrogate its own practice and progress.

A proactive program posture brings the immediate benefit of stronger adaptive systems, because it leverages the monitoring and evaluation data the program collects alongside horizon scanning and context analysis. Perhaps just as importantly for the overall prospects for adaptive management, it can be an important factor in shaping the relationship between donors and implementers. A proactive adaptive posture is not always easy to maintain in the face of performance targets, organizational branding and reputational pressures, but it is the sign of a confident program, which shapes perceptions on the donor side and builds trust. Actively seeking to identify where things are not progressing increases the credibility of claims that other things are.

Understanding adaptation in KSI: Changes over the program life cycle

One of the attractive qualities of adaptive management approaches is that they can free practitioners from many of the rigidities of pre-planned projects.[4] KSI’s experience echoes this principle, but it also leads us to acknowledge the ways in which the life cycle of a program can still have important implications for how it operates, what information is available, and the prospects for adaptation.

There is a real risk that programs talk about adaptive management in a rather static way (e.g. ‘here is our adaptive management approach’), without regard to the phases of a program cycle that remain relevant to most donor-supported efforts. Many of the systems and processes that have been developed and disseminated to support adaptive working are designed to work at what we might call ‘cruising speed’ in the middle of implementation, rather than in the early days or when the end of the program begins to feel imminent.

In some respects, the relative prevalence of ‘flexibility’ over ‘adaptation’ noted above was perhaps to be expected early in Phase 2 implementation. At that time, many initiatives had not yet had sufficient opportunity to explore and progress, and thus the information required for ‘course correction’ had not yet worked its way into the system. Of course, it can be easy to fall victim to the temptation to use incomplete information to support an ongoing mantra of ‘wait just a little bit longer’ or ‘let’s give it one more cycle of implementation and reflection’. In reality, the available evidence is rarely complete and unanimous in what it indicates, and the time will come when hard decisions need to be made on the basis of good but likely imperfect information.

In the meantime, the early stages of a program are ripe for partners of all sorts to make requests, and for the program both to pursue those that seem like good opportunities and to make small investments in the name of developing relationships. Optimism is high and the end of the program seems very far away indeed. In other words, the early years of an adaptive program can seem to offer almost boundless opportunities that might have a plausible link to the desired end-of-program outcomes.

In contrast, as the end of a program approaches, more doors may be closing than opening (with implications for morale that we explore in the brief on the human side of adaptive management). The pressure to demonstrate results ratchets up, and the time for investments in relationships to bear fruit in terms of end-of-program outcomes shrinks. New tensions can arise. For example, programs tend to have strong incentives, built into M&E systems and indicators, to maximize the number and scope of policy changes to which they can report having contributed. As implementation wraps up, the desire to achieve something within the program timeframe can lead a program to pursue (or push a partner to pursue) a second-best alternative when more significant changes might be possible over a longer time horizon. In both this case and the case of KSI influence noted above, the program could then be confronted with a choice between more immediate returns and laying the groundwork for future changes.

Particularly in the last year of implementation, this natural evolution towards consolidation, reporting and sustainability forced the program to be quite explicit about the limited space remaining for adaptation, for revising theories of change and for developing new strategies. It did not diminish in any way the value of continuing to ask whether the current distribution of human and financial resources was appropriate, but it did force an on-the-fly rethink of how adaptive management thinking applied, what it had to offer the program, and what changes to systems, processes and people would deliver the greatest benefit. Expectations of what an adaptive approach looks like should therefore acknowledge the differences between the beginning, middle and end of a program.

Conclusion

Ultimately, then, while the intention to adapt was critical, it was only a first step in a process of building understanding, not only of the complexities of reform but also of adaptation itself. Through experience and reflection it became clear that adaptation could take different forms, each of which would require different things of systems and teams; that responding to changes in context was a different process from adaptation based on the results of implementation, again requiring different skills and processes; and that the practice and expectations of adaptation need to evolve across the program cycle.

We hope this set of learning briefs provides a few shortcuts for others taking a similar journey, but regardless of the growing literature, evidence and tools for adaptation, we expect that for the foreseeable future programs will still need to refine their approach as they implement and learn. Adaptive adaptation sounds a bit silly, but as the development community increasingly moves to integrate adaptive management, we would do well to do so with modesty and an open mind, ready to reflect, learn and change course as necessary.

-----------------------------

[1] These briefs have been written by Daniel Harris with the support of the KSI Performance, Monitoring and Evaluation team, and are based on a program of action research that followed and informed the program’s adaptive management approach.

[2] For example, see the comprehensive ‘how-to’ guidance provided in the Abt toolkit produced in 2021.

[3] Booth, D., Balfe, K., Gallagher, R., Kilcullen, G., O’Boyle, S., and Tiernan, A. (2018) Learning to make a difference: Christian Aid Ireland’s adaptive programme management in governance, gender, peace building and human rights. London: ODI.

[4] Cole, W. and Tyrrel, L. (2016) Dealing with Uncertainty: Reflections on Donor Preferences for Pre-Planned Project Models. San Francisco: The Asia Foundation.